A Case Against Mind-Brain Type Identity

A small demurral of mind-brain identity theory as assumed within widely accepted cognitive neuroscience.

Today, with the help of a critical review (Critical Review of Mind-Brain Identity Theory) and further resources, we will discuss the limitations of the cognitive scheme and of mind-brain type identity theory. We will begin with various discussions branching from this review paper, and include some of my own arguments.

In future articles, I will be attempting to minimise the number of concepts and focus on specific issues. For direction, the purpose of this and the following articles will be to provide food for thought on a continued argument against the computational theory of mind and representationalism, which will come to its climax when we discuss a Bergsonian theory of mind.

To begin with, I will provide some preliminary background on identity theory, to allow us to contextualise this debate.

Introduction to Identity Theory

Identity theory is a very broad topic, and we will be unable to discuss it in its entirety. However, we will discuss a few rudimentary notions applicable to this discussion, many of which I have taken from John Searle’s Philosophy of Mind lecture series on YouTube. This is the first episode to get the reader started (although it is not at all necessary).

First, let us define what is meant by identity, what ontological stuff we are identifying, and in what sense we are using it in our discussion.

The roots of identity theory trace far back, but its modern Western formulation is usually credited to Leibniz, with his principle of the identity of indiscernibles. Effectively, this states that two objects can be considered identical if they share all the same properties. This has raised many issues, including its modal status: is sharing all properties a necessary or a sufficient condition for identity? Even more strongly contested is what exactly is meant by an object and by an abstract property.
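In the usual second-order formalisation, the principle and its converse can be written as follows. The notation is an assumption rather than the article's own: F ranges over properties, and x and y range over objects. The first line is the principle described in the paragraph above. It treats sharing every property as sufficient for identity. The second line, often called Leibniz's Law, treats sharing every property as necessary for identity, and it is far less controversial. The disputes over what counts as an "object" and an "abstract property" are, in effect, disputes about what x, y and F may range over.

```latex
\begin{aligned}
&\text{Identity of indiscernibles:} && \forall F\,(Fx \leftrightarrow Fy) \rightarrow x = y \\
&\text{Indiscernibility of identicals:} && x = y \rightarrow \forall F\,(Fx \leftrightarrow Fy)
\end{aligned}
```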

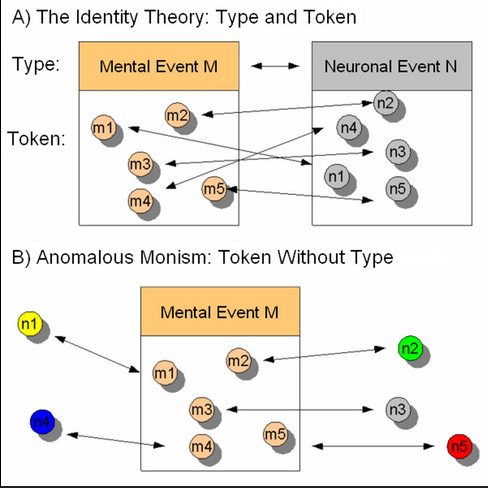

We will discuss this issue in far more detail when we explicate Bergsonian metaphysics. For now, we can follow this chain of thought into the notion of types and tokens. A type, in identity theory, refers to a particular category of object or event; a token, by contrast, can be viewed as an instance of a type.

Philosophers of mind originally posited a type-type identity theory for brain and CNS (central nervous system) states. Type-type essentially means that particular types of mental events are identical to particular types of brain states. Or, more concretely: mental event type M is identical with neuronal event type N.

This emerged in the mid-20th century in response to behaviourism, the view that we are effectively unable to study the mind directly, since doing so requires a non-empirical inductive leap of faith, mental states being entirely private (at least, this is the commonly held belief).

Of course, this is a very surface-level analysis of behaviourism more generally (the view that only observable behaviour should be studied), and we should note that the variety at issue here is analytic behaviourism, a tradition rooted in logical positivism, a position which we have described and argued against in previous articles.

The relevance of type-type identity theory to analytic behaviourism is that it allowed something directly observable and seemingly causal in human behaviour (neuronal states) to be studied directly, and thus provided a physicalist, empirical basis for studying the mind, or so they believed.

Token-token identity theory was later posited to remedy problems with multiple realisability, which is the capacity of a mental event to be implemented by multiple different types of physical process. It essentially solves this problem by considering each mental event instance A to be associated with some neuronal event instance A’, while remaining agnostic about the concrete structure or function (and thus the type) of the neuron or broader neuronal architecture supporting that mental event instance. This abstraction is in fact one of the most damaging mistakes to befall the study of the brain. As a result, the field has not cared to focus on the concrete physical dynamics of the brain beyond the network level, which I believe hold key insights into its true function.

The conception of multiple realisability originates in the computational metaphor, that is, the position that mental processes can be considered as programs implemented on hardware. Programs, of course, have this multiple realisability property, so long as the hardware supports an implementation of some abstract machine, in most cases the Universal Turing Machine.
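The multiple realisability of programs can be illustrated with a small sketch. One abstract function, exclusive-or, is realised by two physically different mechanisms: a lookup table and a circuit built only from NAND gates. The function names are hypothetical and chosen for illustration. The only point is that, at the level of input and output, the two realisations cannot be told apart.

```python
from itertools import product

# Realisation 1 (hypothetical): a stored lookup table, like a small ROM.
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def xor_by_table(a: int, b: int) -> int:
    """Compute XOR by looking the answer up."""
    return XOR_TABLE[(a, b)]


def nand(a: int, b: int) -> int:
    """A single NAND gate on bits 0/1."""
    return 1 - (a & b)


# Realisation 2 (hypothetical): the standard four-gate NAND circuit for XOR.
def xor_by_nand(a: int, b: int) -> int:
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


def same_abstract_function(f, g) -> bool:
    """True if f and g agree on every pair of input bits."""
    return all(f(a, b) == g(a, b) for a, b in product((0, 1), repeat=2))


print(same_abstract_function(xor_by_table, xor_by_nand))  # True
```

On the computational metaphor, a mental process would play the role of the abstract function here, and the brain would be just one of its possible realisers.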

In the case of cognitive neuroscience, these would be cognitive functions implemented in connectionist networks of neurons in the brain. When abstracted into artificial neural networks (built from mathematical models of the neuron), these are, under idealised assumptions, computationally universal to some degree of approximation, and are thus treated as essentially equivalent to a Universal Turing Machine, which is what makes the computational metaphor so appealing. I have left out the technical specifics of what is meant by computational universality.
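One classical way to cash out the claim that neuron models are computationally universal is the threshold logic unit, in the spirit of McCulloch and Pitts. A single threshold unit can be set to compute NAND, and NAND is functionally complete, so networks of such units can compute any finite Boolean function. The sketch below assumes this idealised threshold model, and the weights and bias are illustrative choices. It does not show full Turing universality, which additionally requires recurrence and unbounded memory.

```python
from itertools import product


def threshold_unit(inputs, weights, bias):
    """Idealised neuron: outputs 1 iff the weighted input sum plus bias exceeds 0."""
    return int(sum(w * x for w, x in zip(weights, inputs)) + bias > 0)


def nand_neuron(a, b):
    # Weights -1, -1 and bias 1.5 give an output of 0 only when both inputs are 1.
    return threshold_unit((a, b), (-1.0, -1.0), 1.5)


def xor_network(a, b):
    # NAND neurons wired as the standard four-gate XOR circuit.
    c = nand_neuron(a, b)
    return nand_neuron(nand_neuron(a, c), nand_neuron(b, c))


for a, b in product((0, 1), repeat=2):
    print(a, b, nand_neuron(a, b), xor_network(a, b))
```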

The computational metaphor is the default position in cognitive neuroscience for this reason (and also because it allows for a simpler scientific process for studying cognition; see John Searle #3 for more), and it conditions the vast majority of research efforts in the field, including our understanding of memory (a topic we will dive into later in this article).

A better reason to seriously consider token-token identity theory over type-type identity theory is the issue of modality as it relates to understanding animal cognition. Under a type-type identity theory, one must concede that animals have totally incomprehensible mental states (in that they are very different from human mental states), as their CNS and general neuronal architecture are very different. Under a token-token identity theory, we can say that the link between particular physical states and particular mental states is contingent rather than necessary. This matters because animals have pain-reception architectures (to take a particular example) that differ vastly from those of humans, some having far fewer cutaneous C-fibres (implicated in the perception of excruciating pain).
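The contrast between the two theories, and the animal-pain example, can be sketched in a toy model. All names in it are hypothetical. Each mental token is paired with the physical token assumed to realise it. Token identity only asks that every mental token have a physical partner in the same subject at the same time. Type identity asks that each mental type always be realised by one and the same physical type. A single non-human realiser of pain is enough to break type identity while leaving token identity intact.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A token: one concrete occurrence of an event type, in a subject, at a time."""
    type_name: str
    subject: str
    time: float


# Hypothetical mental-token / physical-token pairings. The neural type names are
# illustrative placeholders, not claims about actual nociceptive physiology.
pairings = [
    (Event("pain", "human", 0.0), Event("c_fibre_firing", "human", 0.0)),
    (Event("pain", "other_animal", 1.0),
     Event("other_nociceptor_firing", "other_animal", 1.0)),
]


def token_identity_holds(pairs):
    """Toy token identity: each mental token has a physical token in the same
    subject at the same time, whatever that physical token's type."""
    return all(m.subject == p.subject and m.time == p.time for m, p in pairs)


def type_identity_holds(pairs):
    """Toy type identity: each mental type is realised by exactly one physical type."""
    realisers = {}
    for mental, physical in pairs:
        realisers.setdefault(mental.type_name, set()).add(physical.type_name)
    return all(len(types) == 1 for types in realisers.values())


print("token identity:", token_identity_holds(pairings))  # True
print("type identity:", type_identity_holds(pairings))    # False
```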

Anomalous Monism is a theory posited by Donald Davidson which extends token identity theory with an argument based on the apparent non-existence, or at least empirical unverifiability, of psychophysical laws. He advances three principles, each justified to some extent: the interaction principle, the cause-law principle, and the anomalism principle.

The interaction principle states that mental events and physical events causally interact: deciding to scratch one’s head causes one’s arm to lift, and seeing rain may elicit thoughts of how to avoid getting wet. This seems fairly obvious. The cause-law principle states that all cause-effect relations must be covered by strict laws. The anomalism principle adds that there are no strict laws on the basis of which mental events can predict, explain, or be predicted or explained by other events. Together, these principles yield a token-token identity theory of mental states. Every mental event that causally interacts with physical events is identical to some physical event, even though mental types are not identical to physical types.
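Davidson’s route from these three principles to token identity is often reconstructed roughly as follows. Here m is a mental event and p is a physical event. The predicate names are my own shorthand: Mental(L) means that L is stated in mental (psychological or psychophysical) vocabulary. Step 4 relies on a further assumption, which Davidson accepts, that any strict law is stated in either mental or physical vocabulary.

```latex
\begin{aligned}
&1.\ \mathrm{Causes}(m, p) && \text{interaction principle}\\
&2.\ \forall x\,\forall y\,\big(\mathrm{Causes}(x, y) \rightarrow \exists L\,(\mathrm{Strict}(L) \wedge \mathrm{Covers}(L, x, y))\big) && \text{cause-law principle}\\
&3.\ \neg\exists L\,\big(\mathrm{Strict}(L) \wedge \mathrm{Mental}(L)\big) && \text{anomalism principle}\\
&4.\ \exists L\,\big(\mathrm{Strict}(L) \wedge \mathrm{Physical}(L) \wedge \mathrm{Covers}(L, m, p)\big) && \text{from 1, 2, 3}\\
&5.\ m \text{ falls under a physical description, so } m \text{ is a physical event} && \text{from 4 (token identity)}
\end{aligned}
```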

This theory is problematic for multiple reasons, and will get a quick treatment as we further deconstruct type-type identity theory in later sections. Firstly, it requires that physics be strictly causally closed. That assumption has been challenged by the development of quantum mechanics and by the question of whether the wavefunction is ontic (that is, whether it actually exists). If it is, and potentials are thus reified, then this model of causation falls to pieces. Of course, one need not appeal to quantum mechanics. One can instead look at the problem of change in general (which we will cover in a future article) to see why the cause-law principle is grounded on a poor model of causation.

The Predominant View: Epiphenomenalism, Material Monism, Mind-Brain Type Identity

So now we introduce a few further concepts and terms which extend the ontological position taken in cognitive neuroscience on the nature of mental states. Before we do this, however, some further historical context should be provided. It demonstrates the significance of the developments in identity theory above, and why they can even be considered controversial or in dispute.

Firstly, we discuss a concept known as Cartesian Dualism, which the philosopher and scientist René Descartes posited in "Meditations on First Philosophy", first published in 1641. He distinguished between mind (res cogitans) and material bodies (res extensa) as distinct ontological substances. Mind is intensional, non-extended, and so forth; matter is extensional, extended, occupies space, and so forth. The very short story (and therefore one void of many historical details) is that this distinction was utilised to justify a position known as material monism.

This position takes Descartes’ two substances and draws its conclusion on the basis of three things: Galileo’s reduction of Aristotelian metaphysics, the logical positivist tradition, and Karl Popper’s critical rationalism. It decides that only matter exists, and that mind is identical to a complex material process (brains, in the case of neuroscience), on the basis of a token-token or type-type theory as outlined above.

This Galilean reduction is a historical development which includes the separation of perception into primary and secondary qualities. Spatial extensity and motion are seen as primary and completely objective, whereas colour, as a particular example, is seen as secondary and entirely subjective.

We have discussed logical positivism previously (the belief that we should only care about logical necessity and sensory experience in making truth claims).

Karl Popper’s falsificationist paradigm we have also discussed. To recap, it is a self-contained engine for scientific progress, devoid of understanding of the categories of thought it produces. It works by assuming particular truths (which Popper calls ‘basic statements’), which then serve as premises that allow a scientific theory to be reasonably shown to be false.

These all combine to form the materialist monism (or extended physicalism) ontological position that dominates all neuroscientific data interpretation and research focus.

Now we tackle our second new term, epiphenomenalism. Since we are continuing in the tradition of the Galilean reduction, phenomena, being mind-dependent and secondary, must be considered the product of a material process. In neuroscience, this is usually framed as an argument from strong emergence via complexity, in which the unified subject of experience emerges out of the vast interconnectedness and processing power of neural networks. However, since the underlying mechanism is physical, the mental has no causal powers.

When combined with a deterministic model of causation, this raises the question of why experiential states should be paired with physical brain states at all. The physical system could continue to self-perpetuate and function without all of the suffering and joy and other internal fluff of the mind. The issue is made more lucid by the thought experiment of philosophical zombies (which we may discuss later in this article or in future editions): bodies without consciousness that are seemingly functionally equivalent to humans but have no conscious states.

As a side note, my intuition is that the ability to imagine a functionally equivalent philosophical zombie without conscious states rests on an issue poorly posed with respect to time. This point is inspired by a Bergsonian review I will be approaching in the future. The argument goes as follows.

Personal Argument (Philosophical Zombies are Incoherent in Time)

Conscious beings have functional brain states that evolve over time and self-organise (structure is informed) based on internal factors: free will decisions, emotional states, unconscious desires, and so forth. An arbitrary instant of time (‘encoding’ a slice of the brain) would capture an aggregate of functions within the body, where each pathway in the brain is always causally involved in something physically active in the body, whether that be motor action, homeostatic regulation and so on.

However, this spatiotemporally ‘sliced’ body now has no causal powers in itself (i), and does not require mental states (ii).

(i) More concretely, this system has to be stimulated by something external that is causally real, which realises the activation of any particular pathway at the moment the body is sliced. This means that it is essentially passive and inert, very much like a philosophical zombie: an aggregate of ‘programs’ that can be used to generate particular bodily reactions and movements, particular regulatory activities such as immunosuppression, and so on. Further, this neural activation is a change enabled by the actualisation of a potential (the potential for the neural pathway to be activated). It is in fact a hierarchical causal series, to borrow some Thomistic terminology after the medieval theologian St Thomas Aquinas, and thus it must have derivative causal power.

This should be clear from the following example: a particular bodily movement derives its causal power from the sustained activation of motor neuronal pathways, which derive theirs from motor pathways in the brain, and so forth.

(ii) Of course, as a follow-up to (i), the body in that slice is already ‘functionally determined’, in that it requires no development, change or evolution to obtain functionality it does not already have. There is therefore no time for mental states to occur. This point requires a Bergsonian backstory to justify completely, but it is at least intuitive. We consider impulse reactions (unconscious decisions) to be instantaneous, and that is largely how they are differentiated from conscious decisions.

Thus we note that a philosophical zombie is the result of reducing the human form to a null instant of time (that is, an infinitesimal moment) and then considering how this system could function for itself in a totally abstract sense. This is nonsensical, however, since the world we live in can never be divided ad infinitum into such a null instant. All human beings are dynamical systems which evolve indivisibly through time, and their consciousness is characterised by such indivisible transformation. I have demonstrated this intuitively by comparing the experiential difference between an impulse reaction and a conscious decision, and it will be made far more logically lucid in my review of Bergson.

In my view, then, the philosophical zombie cannot actually exist concretely in the world, and can only exist abstractly in a simulation. Such a zombie would also need to be programmed and modified by a true cognitive agent embedded in the concrete world, and would have no experience and no causal powers of its own.

Now, continuing from before my personal argument, the reader should know that the basic approach of strong emergence from neural-process complexity is no longer taken seriously. We have since clarified some of the necessary properties of experience, in particular the features of local and global binding. There are, however, still more outdated materialistic theories of consciousness, such as IIT (Integrated Information Theory), which focus primarily on ‘re-entrant loops’. These belong to a tradition dating back centuries in which the experiencing subject is equated with self-reflexive and self-referential information processing, a very contentious notion which we will argue against in future articles.

The focus has now shifted toward finding a classical integrator or binder in the brain that unifies experience across disparate sensory modalities into a single moment (as in Joscha Bach’s take on computational functionalism, for instance), in order to account for local and global binding. All of this will be discussed later, in particular in the section titled The Seat of Consciousness.

Further, the concept of strong emergence is problematic in itself. By definition, strong emergence refers to properties or abilities of a system which are not present in its parts. More concretely, it refers to a description of a formal system which is not found in the aggregate description of its subsystems. This is an issue especially when we ground a neuroscientific approach in a physical theory, since strong emergence calls into question the causal closure of physics. Type-type identity theory relies on that closure, as do many forms of the weaker token-token identity theory, such as Donald Davidson’s anomalous monism (in particular its cause-law principle).

Still, the dominant position is that of material monism, consciousness as an epiphenomenon, and the mind being identified with neuronal processes (generally a mind-brain identity theory; unconcerned with whether this is token, type or anomalous). So now, with this knowledge, we will continue to some criticisms and alternatives.

Neurological Causation-Correlation Fallacies

Now that we have illuminated in some detail the metaphysical presuppositions supporting cognitive neuroscience, and their myriad issues, we will disentangle what I believe to be one of the most egregious blunders in all of science to date.

So, in short, what exactly do I mean by a neurological causation-correlation fallacy? It is simply this: the belief that activations of neural networks alone are causal of particular mental states. Of course, no one would argue against physical brain states being correlated with mental states, and many examples can be given. The most common example is that lesioning implies loss of function. If Broca’s area (associated with speech production) is lesioned, for example, this leads to difficulty speaking. Neurochemistry is also used to justify this correlation. A familiar case is the subjective effects of caffeine, which are attributed to its blocking of adenosine receptors and the resulting increases in adrenaline release and dopamine signalling.

So, if this correlation is undeniable, what is the issue with claiming that biophysical states of the brain cause mental states? The issue is a fallacy: correlation is not a sufficient criterion for causation. I imagine this has been drilled into the minds of many of my readers, so I will try not to push the point for too long. The causation-correlation fallacy (cum hoc ergo propter hoc) is a close relative of the post hoc fallacy, in which a preceding event is taken to be a causal antecedent of, or causally related to, a following event. It is committed all the time with verbs such as ‘produce’ and ‘generate’. These essentially mask the logical gap in the claim by making it appear to be the obvious conclusion from empirical data.

Further, I find this to be a clear indicator of dogmatism, which unfortunately abounds in modern Western academia. Moreover, reinforcing this argumentation stifles any research effort to understand other physical mechanisms in the brain outside the classical connectionist network model. For example, there is growing evidence that gamma synchrony and brainwave patterns are more closely coupled with conscious states. These have more to do with resonance and coherence across multiple networks than with the activity of individual networks per se.

So then, what may be a valid alternative? A complete, concrete answer is something I will be actively developing and presenting throughout this series. I will first discuss Bergson. I will then apply a Bergsonian framework to perception, an application largely attributed to Stephen E Robbins with his modulated reconstructive wave. Finally, I will apply a Bergsonian framework to understanding organic learning and brain development.

The firm grounding and conceptual framework for the complete model will, however, be discussed in the next section.

An Equally Possible Position Opposing Material Monism: Filter Theory

Now, we will discuss a valid alternative position which follows from the potential reasons behind a correlation between biophysical brain states and mental states. In particular, we will consider what could explain a correlation between any pair of events, with two examples.

(i) Firstly, event A permits event B. In this case, event A creates the conditions that allow event B to occur: A doesn't directly cause B, but it makes B possible or more likely. This is sometimes referred to as an "enabling" relationship. In this instance, permissivity would be related to transmission, that is, the transmission and enabling of the expression of a mental state in the body.

(ii) Secondly, we could invert the causal relation. Given only a correlation, there is no reason to consider only that neuronal state A may cause mental state B; the inverse is equally possible, with mental state A causing neuronal state B.

Now, to follow further on point (i) - we will consider the conceptual grounding provided by William James, a prodigious American philosopher and psychologist of the 19th century.

William James’ and Huxley’s Filter Theory

Now, to preface: this subsection will not suffice as a model of filtering. It will simply provide the logical background and frame our future attempts to give a concrete explanation of how such a theory of mind could work with our current knowledge of physical processes in the brain. As discussed previously, this will be done in conjunction with a Bergsonian metaphysics.

“My thesis is now this: that, when we think of the law that thought is a function of the brain, we are not required to think of productive function only; we are entitled also to consider permissive or transmissive function. And the ordinary psycho-physiologist leaves this out of his account” (William James, 1898)

James thought of the brain in terms of a bidirectional transducer theory.

He used the analogy of a prism separating white light into differently coloured beams. The refractive index of the prism’s glass varies with wavelength, so each component of the light is bent by a different amount (chromatic dispersion). Thus the prism has a transmissive function; it cannot be said to generate the coloured light.

The brain can be viewed in a similar fashion. A future article, also related to point (ii), will provide the particulars of this, including what exactly the light being transduced into neural activity is in this context. That article will relate the idea to the work of the theologian Swedenborg, and will be titled Swedenborg’s Resolution of Mind-Body Dualism.

“To make biological survival possible, Mind at Large has to be funneled through the reducing valve of the brain and nervous system” (Aldous Huxley, 1954)

Aldous Huxley, writing about half a century after William James, extended his conceptual framework by incorporating ideas from Berkeley’s subjective idealism, which we will cover at some point in the future.

From these perspectives, mind uses the brain as an instrument, an interface of expression. It is equally rationally justified to state that mental processes utilise some neuronal processes to achieve some goal, namely to act in the body. This may also provide insight into why some neuronal processes are largely conceived to be unconscious, and others as contributing to experiential states.

The Seat of Consciousness: Integration, Phenomenological Binding

Now, we move on to the issue of the ‘seat of consciousness’, whose logical antecedent we introduced briefly when discussing local and global binding. These concepts are now made more concrete and used to demonstrate the ‘final stop’ of a materialistic conception of the mind.

Firstly, I shall quickly introduce the two main types of phenomenological binding labelled above. Global binding refers to the fact that perception is unified: a moment of conscious experience is composed of a variety of features which present simultaneously. This likely has far richer origins, but within the Western philosophical tradition it was first presented by Leibniz, who stated “whatever is not a true unity cannot give rise to perception” and “Each simple substance (monad) has relations that express all the others, and consequently, that each simple substance is a perpetual, living mirror of the universe”.

We will definitely discuss the second quote in a future article, combining Leibniz’s monadology with the Bergsonian and Bohmian holographic field. For now, it is clear that the idea being presented is that consciousness can be considered to ‘bind’ multiple perceptual features: a diversity in a unity. This also corresponds to an issue outlined by William James known as the combination problem, which, again, concerns how many perceptual features are integrated into a single moment of experience.

Local binding is an even more specific term which refers to the issue of sensory features being attached to their source, for example, how does colour bind to its source (why doesn’t the greenness of the Granny Smith spill over the sides and compete with the redness of the Pink Lady in my fruit basket?). Now, local binding is in fact even more important for the combination problem, but it is not something we will be discussing too much in depth today, and we leave this for a future article.

However, the concept of global binding is something that has received treatment within the materialistic conception of mind-brain identity. Attempts to resolve it usually involve finding the seat of consciousness, that is, the place where distributed information processing across sensory modalities (e.g. visual and auditory cortices) is effectively integrated into a single unified moment.

Examples include global workspace theory and the approach of Joscha Bach with his classical integrator. The term ‘classical’ is important here. On one notion, binding could obtain a reasonable resolution through quantum effects (not to be confused with quantum information processing), which we will also discuss in a future article. These approaches, by contrast, focus on classical information processing (computations on classical bits, realised as action potentials in individual neurons).

One major issue to be discussed in that future edition is the problem of classical integration speed, which seems insufficient for binding. Setting that point aside, other issues are outlined in the following article, in particular that information integration seems unnecessary for many unisensory experiences (https://pmc.ncbi.nlm.nih.gov/articles/PMC5082728/).

Another example is the following (taken from our review article):

“Crick and Koch once postulated that the claustrum, a sheet-like neuronal structure hidden beneath the inner surface of the neocortex, might give rise to “integrated conscious percepts”–that is, act like the “seat of consciousness” (Crick and Koch, 2005)”

Further, this fundamental question is raised:

What distinguishes a neural process that leads to a conscious experience from that which does not?

This question (our cliffhanger) will be given a piece of its own, later in this series, in which I introduce my personal theory of organic learning.

For this section, I would recommend that the reader work through the original extract subsection in its entirety. It includes many other lines of neurological evidence, not included here, that demonstrate the dubious nature of the ‘seat of consciousness’.

However, I hope the reader now has a clear understanding of where this material monist conception of mind, grounded in the brain, is headed.

The Mystery of the Engram - Neural Correlates of Memory

We now consider many oddities in the study of the neurological (and, more generally, biological) basis of memory. These seem to point toward memory’s resistance to physicalist reduction, further demonstrating the inadequacy of mind-brain identity. For brief context (to be expanded upon in the very next subsection), consider that mind-brain identity theory uses an account of spatial memory, in this case neural network pattern memory. We will discuss this account, and its manifold problems, in more technical detail later. Within this spatial model, searching for a physical trace of memory, as in the title, means looking for an engram.

The first neurological evidence we will discuss against the spatial memory model, and against the notion of finding an engram, comes from two surgical procedures. The first is hemispherectomy, the removal of an entire cerebral hemisphere. The second is corpus callosotomy, the severing of the corpus callosum which connects the left and right hemispheres. Both are usually performed to treat severe epilepsy: the former to contain seizures presenting predominantly in one hemisphere, and the latter to prevent larger brain-wide events.

So, what is so special about these surgeries? Fascinatingly, it seems that in both instances they do not lead to memory impairment. How would we go about explaining this in the context of the physicalist model? A first point often raised is neuroplasticity: neural networks can essentially re-model themselves and re-map associative memories in a distributed fashion across the whole brain. Problematically, though, this does not explain how, with the loss of half the brain, practically no information content is lost at all.

It may instead be posited that memory was stored in both hemispheres all along, so that removing one hemisphere leaves the information retained in the other. This seems, however, to require a far more convoluted mechanism. Are multiple copies of the same memory retained across both hemispheres (insofar as one takes the concept of a representation seriously)?

Even more perplexingly, in the next section we will discuss the case of the freshwater flatworms known as planaria. These can have their heads severed and regenerated (along with their brains) whilst retaining associative memories, which completely destroys, at the least, the notion that all memory has a neurological basis.

So it seems that some weight of empirical evidence points to memory not being stored physically. Do we have an alternative?

Where are my Memories, then?

This question is, of course, itself embedded within the materialist conception of spatialised memory: why ought there to be a “where” at all? This section will be a fairly short introduction to an alternative to the materialist conception of spatial memory in the brain, with some further points on why this new paradigm is useful. A full treatise, however, will be given in an article dedicated to the Bergsonian model of mind.

Firstly, we will quickly decompose the notion of spatial memory and disambiguate it from its alternative, temporal memory. See the following graphic:

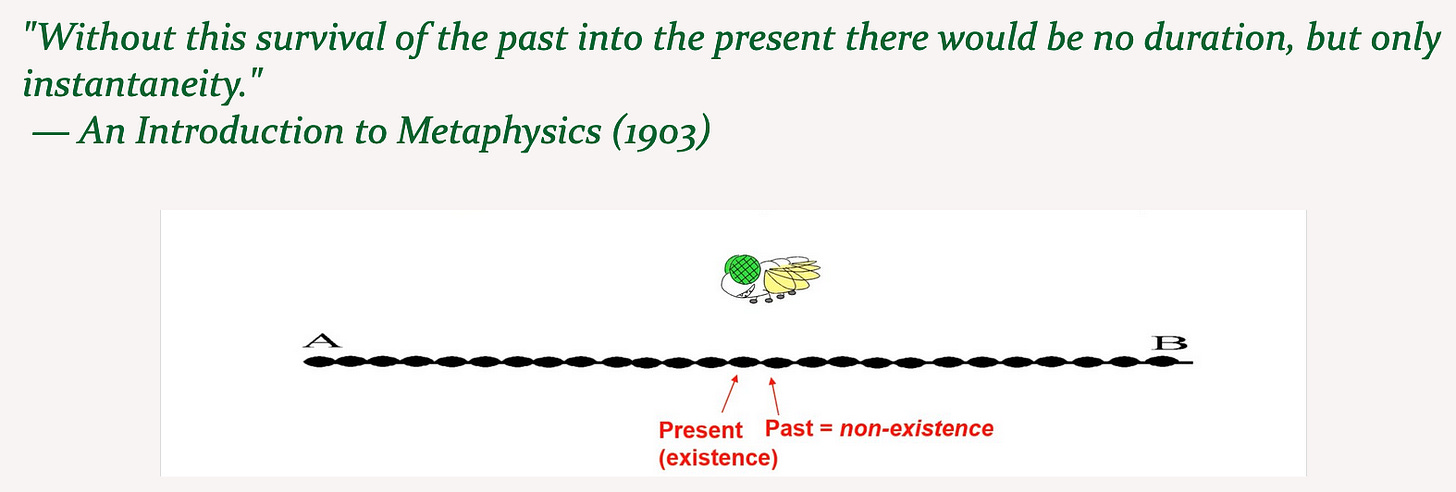

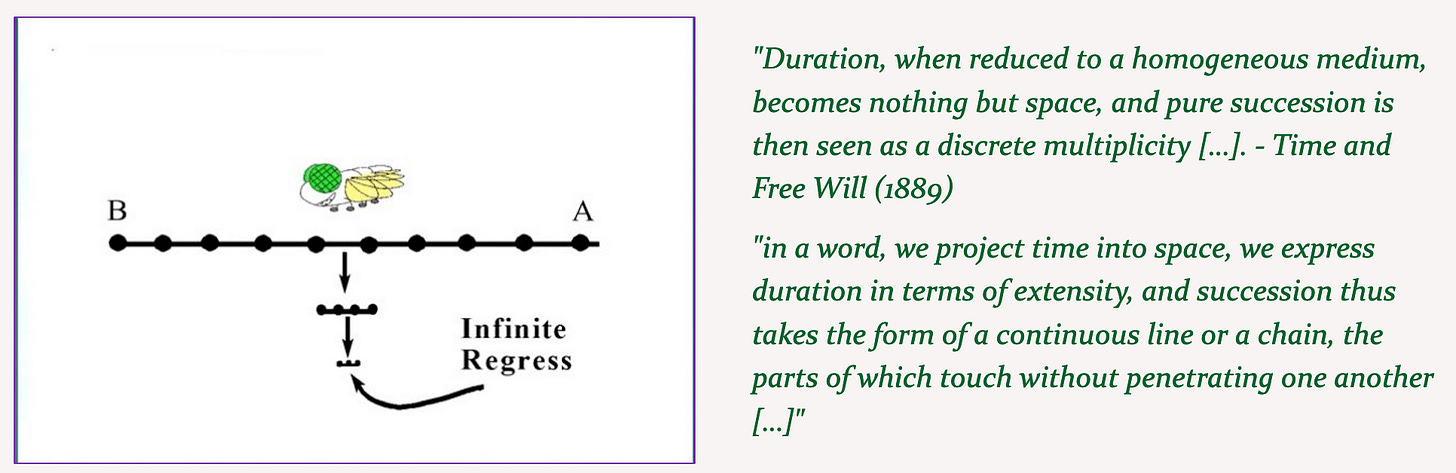

This assumption comes from an idealised and spatialised model of time (termed abstract space). This model is a grid of mutually external points placed over the actual continuous extensity in the world (which Bergson termed duration). We can see that the past falls into non-existence, and only the present instant has actual existence. This point is relevant to the discussion below the following graphic, which includes more extracts from Time and Free Will.

Here, we can see a particularly important and prevalent assumption within all of modern biology, neuroscience and computer science, that is the action of memory as a transmission of a representation which is etched into spatially extended matter, where some form of signal or transformation causally connects any pair of instants. Since each moment of time is falling away, each instant must ‘maintain’ information about previous instants.

For example (expanding on the previous section), freshwater flatworms known as planaria have been studied extensively for their self-regenerative capabilities. Even after their heads have been severed, conditioned associations (e.g. between an electric shock and a bright light) remain intact. So how does the (physicalist) biologist respond to this heavy blow to the idea that memory is stored only in the brain? They posit another material substance to hold a representation of the associative map instead, in this instance RNA, despite limited evidence of its storage capabilities and of its involvement in this case. RNA then serves as the matter that, in the present instant, holds on to the associative memory.

So what could be a potential alternative? Well, consider the following quote from Bergson:

“The present distinctly contains the ever-growing image of the past (…)”

This stands in clear opposition to privileging the existence of the present moment. That is, active processes and forms are continuously informed by the past, and the entire past is accessible to the present moment. So, in this model of time, how could memory work?

We will demonstrate this in some detail (to be expanded on much more in future) using a brilliant quote by Stephen E. Robbins and a visual depiction. In the quote, replace ‘thought’ with ‘memory’; the meaning is essentially unchanged.

"Thought is comprised of the simultaneous relation of dynamical patterns with virtual objects of the four-dimensional mind." - Stephen E Robbins

I will leave the concept of virtuality to one side for now, as this piece is already informationally dense. For our purposes, simply take virtuality to be the ontological status of the past in contributing to the present moment. Note also the use of ‘simultaneous’ and ‘dynamical’. Let us disambiguate both terms and show how they inform the temporal model of memory.

Simultaneity essentially combines multiple perceptual images in a single moment of experience, something which is in principle impossible if we consider the abstract space model, where each moment of time is an instant, a singular state. This is something which we would like in the context of binding as well, as discussed prior.

Dynamical means ‘invariant over time’, which again is impossible in a scheme where there is mutual externality between any two moments of time.

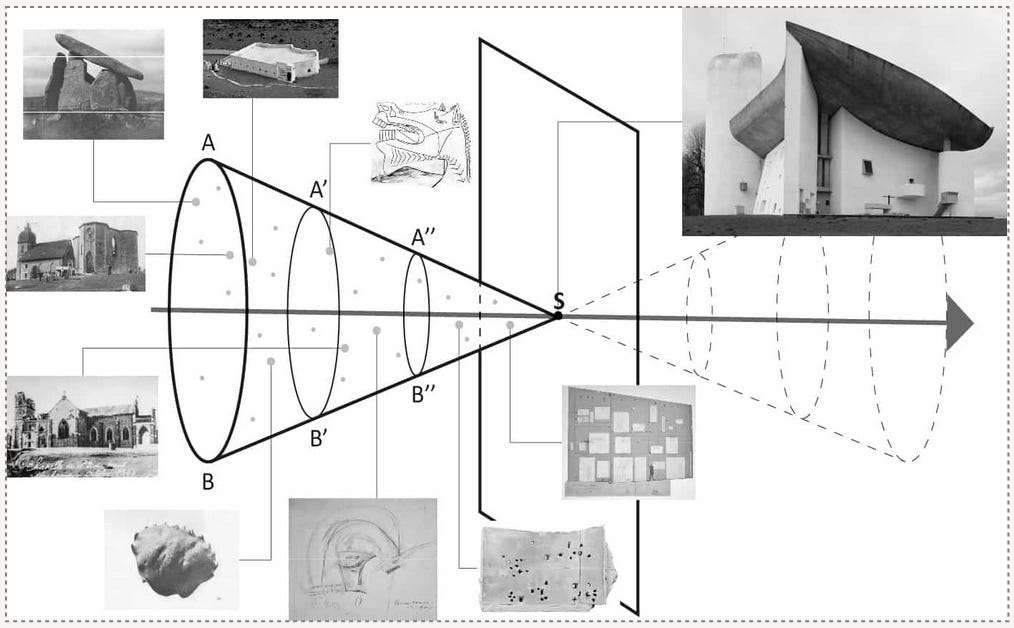

Here we see Bergson’s memory cone. It includes the present sensori-motor equilibrium S and recollections of previous forms (A, B), (A’, B’), (A’’, B’’), which in this case are the virtual objects. Since all recollections are simultaneously available in time, there is no need to ‘reach in’ to storage and extract them. This is demonstrated in a form of cued retrieval known as redintegration, which we will discuss at length in a future episode dedicated to the Bergsonian theory of mind.

In fact, the recall process has more to do with similarity of form. These virtual objects can be considered temporally extended events defined by particular kinds of invariants, and when two events with similar sets of invariants are presented simultaneously, they are recollected together. For example, in the depiction above, the event of perceiving Stonehenge, combined with other architectural forms, contributes to their combined recollection and to the creation of a new architectural form in the future.

This memory cone is also a temporal model of any particular process in nature, in that it is a formative field, or a field of constrained potentials. Any process in nature can be seen in this light. For example, a tree has total freedom to grow and flourish within the constraints of its particular form, which is governed by its past instances, allowing diversity within any particular tree species whilst adhering to a general ‘schema’.

Now, an advocate of materialist memory would immediately flag this as a stand-in explanation for something which can already be explained by DNA: that is, the form of any particular organism is encoded in, and later generated from, its DNA. The latest rendition of this is termed the ‘generative model of the organism’, which is a genuinely exciting but ultimately problematic theory. The reader is encouraged to evaluate it for themselves (Generative Model of the Organism).

I will provide a principled reason on the basis of form itself as to why this cannot be the case (also making reference to the formal notions provided in the generative model paper; see the section after the following), but it should also be noted that the importance of DNA in organismal form and development is heavily disputed.

Finally, the cone could be considered constrainable: only a particular subset of the virtual events in the past of a being’s temporal extent may contribute to the present moment. This provides the roots for a concrete realisation of James’ & Huxley’s Filter Theory discussed previously. It will be extended into a full-fledged future piece on a model of the immaterial soul on this basis, where some empirical evidence will also be presented (exciting, I know).

As stated previously, all of the foundational concepts (including Bergson’s duration, abstract space and virtuality) will be discussed at length in a specialised piece.

Memory in Nature; Applying the Temporal Model

A deeper notion, which we will discuss in Nature of Experience 4: The Physical Dynamics of the Bergsonian Metaphysic, is that processes in nature seem to adhere to laws; they behave habitually.

This seems immediately obvious to us because we observe it and have internalised it as common sense, but it is possible to imagine a world with less regularity, or one where regularity is not self-sustained but contingent on some other factor. So the glaring questions with respect to memory seem to be: how does a particular material know how to behave as it should? Were all physical laws ‘instantiated’ at some linear origin of the universe, or did they develop, being refined together with the universe’s states? Do all possible substances already exist, or is genuine novelty still being introduced to this day, implying that physics is not causally closed?

In the context of materials science, Rupert Sheldrake’s “A New Science of Life” provides many fascinating examples of novel habituation, cited here in relation to crystallography (the study of crystal structure and growth). This is particularly useful, as it provides an empirical basis from which to infer that similar mechanisms are involved in shaping all processes and forms in nature.

For context, I will briefly explain the concepts of morphic resonance and morphogenetic fields so that the extract is more readable. We will discuss these concepts in far more depth when we provide a Bergsonian interpretation and modification of Lamarckian evolutionary theory, which has recently gained some traction in relation to epigenetics; we, however, will relate it to inheritance through the temporal model of memory.

Morphic resonance is the idea that similar systems share a collective memory and can use it to inform their evolutionary trajectory. This is useful for the inheritance of behaviour, but also for the maintenance of form (key examples are provided in the extract). This is equivalent to the temporal model of memory explicated above, where we consider each process in nature as a formative field with collective memory, with each form resonating with similar past forms (and also with specific events in the external field, which we have not covered).

Morphogenetic fields are then these ‘fields of constrained potentials’ which essentially characterise a particular form, such as a plant species or chemical compound.

In the case of morphic units that have existed for a very long time – billions of years in the case of the hydrogen atom – the morphogenetic field will be so well established as to be effectively changeless. Even the fields of morphic units that originated a few decades ago may be subject to the influence of so many past systems that any increments in this influence will be too small to be detectable. But with brand-new forms, it may well be possible to detect a cumulative morphic influence experimentally.

Consider a newly synthesized organic chemical that has never existed before. According to the hypothesis of formative causation, its crystalline form will not be predictable in advance, and no morphogenetic field for this form will yet exist. But after it has been crystallized for the first time, the form of its crystals will influence subsequent crystallizations by morphic resonance, and the more often it is crystallized, the stronger should this influence become. Thus on the first occasion, the substance may not crystallize at all readily; but on subsequent occasions crystallization should occur more and more easily as increasing numbers of past crystals contribute to its morphogenetic field by morphic resonance.

In fact, chemists who have synthesized entirely new chemicals often have great difficulty in getting these substances to crystallize. But as time goes on, these substances tend to crystallize with greater and greater ease. Sometimes many years pass before crystals first appear. For example, turanose, a kind of sugar, was considered to be a liquid for decades, but after it first crystallized in the 1920s it formed crystals all over the world [Woodard, G.D. and McCrone, W.C. ‘Unusual crystallization behavior’. Journal of Applied Crystallography. 8 (1975), p. 342]. Even more striking are cases in which one kind of crystal appears, and is then replaced by another. For example, xylitol, a sugar alcohol used as a sweetener in chewing gum, was first prepared in 1891 and was considered to be a liquid until 1942, when a form melting at 61°C crystallized out. Several years later another form appeared, with a melting point of 94°C, and thereafter the first form could not be made again [Woodard, G.D. and McCrone, W.C. ‘Unusual crystallization behavior’. Journal of Applied Crystallography. 8 (1975), p. 342].

Crystals of the same compound that exist in different forms are called polymorphs. In many cases they can coexist, like calcite and aragonite, which are both crystalline forms of calcium carbonate, or like graphite and diamond, both crystalline forms of carbon. But sometimes, as in the case of xylitol, the appearance of a new polymorph can displace an old one. This principle is illustrated in the following account, taken from a textbook on crystallography, of the spontaneous and unexpected appearance of a new type of crystal:

“About ten years ago a company was operating a factory which grew large single crystals of ethylene diamine tartrate from solution in water. From this plant it shipped the crystals many miles to another which cut and polished them for industrial use. A year after the factory opened, the crystals in the growing tanks began to grow badly; crystals of something else adhered to them – something which grew even more rapidly. The affliction soon spread to the other factory: the cut and polished crystals acquired the malady on their surfaces …

The wanted material was anhydrous ethylene diamine tartrate, and the unwanted material turned out to be the monohydrate of that substance. During three years of research and development, and another year of manufacture, no seed of the monohydrate had formed. After that they seemed to be everywhere.” (A. Holden and P. Singer) [Holden, A. and Singer, P. Crystals and Crystal Growing. London, UK: Heinemann, 1961]

These authors suggest that on other planets, types of crystal which are common on Earth may not yet have appeared, and add: ‘Perhaps in our own world many other possible solid species are still unknown, not because their ingredients are lacking, but simply because suitable seeds have not yet put in an appearance.’ [Holden, A. and Singer, P. Crystals and Crystal Growing. London, UK: Heinemann, 1961]

Those interested in a possible theoretical basis for this should read Lee Smolin’s paper on the principle of precedence (Law of Precedence and Freedom); we will, however, cover this in far more depth in Nature of Experience 4.

More generally, this suggests that cognition must be present throughout nature, something which various revolutionary biologists studying basal cognition, such as Michael Levin, are coming to posit (for example, on the basis of findings that individual cells have extensive problem-solving capabilities).

More on Memory, Invariants & Form

A further issue with the spatial concept of memory is that of the representation of form. This will be a brief treatment, since we will later discuss an elaboration of Gibson’s ecological theory of perception by Stephen E. Robbins, which will (hopefully) make this very clear to the reader.

Now, what exactly is meant by ‘form’? This follows neatly from the points above about perception being a ‘unity in diversity’ and equally a ‘diversity in a unity’. The form of any perceptual object is represented both analytically (in terms of its composite parts, as a representation, which we will later come to see as a Bergsonian instant) and intuitively (in terms of its indivisible wholeness, which we will later come to see as lying within a Bergsonian duration).

For example, let us consider the perceptual event of seeing a Christmas present under the tree. This event is temporally extended, and we are able to analyse a particular instant in it (although never down to the null, zero-time instant, which is an idealised notion) and particularise (represent) the gift as composed of sides, vertices and faces (assuming a mathematical analysis which strips away the beautiful colours and intricacies of the wrapping).

Once this analysis has taken place, we have performed a particular kind of reduction known as abstraction. The very fact that we can abstract in this way demonstrates the problem with computation more generally, but again (and I apologise to the reader for these continual cliffhangers) we will discuss this in more depth when we cover Bergson fully.

It is now impossible for us to recover the original perceptual event from this abstraction; the most we can do is relate some later idealised instant in the event to this abstraction. In this way we obtain a series of mutually external instants: the parts (representations) which make up the event.

However, without an understanding of the whole, it would be impossible to meaningfully relate these instants to one another (whether causally or otherwise, as they figure in the event). The whole is a necessary precondition for this compartmentalisation. Further, the parts are necessary to distinguish different perceptual events. Thus we have a part-whole, whole-part duality between percepts and their representations.

As a small aside, this concept of part-whole, whole-part duality in perception seems to have some fascinating links to Iain McGilchrist’s work on hemispheric function, with the left hemisphere generally associated with reduction and abstraction (analysis), and the right hemisphere with mirroring and coinciding with a perceptual whole ‘as it is’ (intuition). I hope to cover his seminal popular book ‘The Master and His Emissary’ as it relates to this topic in the future.

Now, let us consider the standard academic mathematical model of memory in the brain: neural architectures that are sensitive to pattern reactivation, rather than the very simplistic model of computerised ‘file cabinets’.

We will use a Hopfield network as a key example, since its associative memory architecture does coincide with a temporal model of memory to some degree (as we will see, only partially, since it still treats time discretely), but it does not suffice for capturing dynamical events defined by invariants.

Without drowning in technicalities (the paper ‘Hopfield Networks is All You Need’ delves into these, and we will hopefully go into them on our other page when we cover deep learning), consider this network as storing a set of patterns. These patterns are particular states of the network: pattern vectors of binary values recording whether each neuron is on or off. Of course, this is much closer to what we need, since it is a dynamical system (it evolves over time) and its memory works through association and similarity, i.e. a cue X recovers a stored pattern X’ due to the similarity between X and X’.
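To make this similarity-based recall concrete, here is a minimal sketch of a classical binary Hopfield network in plain Python. It assumes the textbook Hebbian storage rule and asynchronous sign updates, with ‘on/off’ encoded as +1/-1; the modern continuous variant in ‘Hopfield Networks is All You Need’ works differently, and the stored patterns below are purely hypothetical. A corrupted cue settles back into the stored pattern it resembles, one discrete update at a time.

```python
import random


def train(patterns):
    """Store +1/-1 patterns with the Hebbian outer-product rule (zero diagonal)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights


def recall(weights, cue, max_sweeps=10, seed=0):
    """Asynchronous updates: each neuron takes the sign of its weighted input,
    repeated until a full sweep changes nothing (a fixed point, ideally a
    stored pattern) or max_sweeps is reached."""
    rng = random.Random(seed)
    state = list(cue)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)  # update neurons in random order
        for i in order:
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two hypothetical stored patterns (+1 = neuron on, -1 = neuron off).
stored_a = [1, 1, 1, 1, -1, -1, -1, -1]
stored_b = [1, -1, 1, -1, 1, -1, 1, -1]
W = train([stored_a, stored_b])

cue = [-1] + stored_a[1:]  # stored_a with its first neuron flipped
print(recall(W, cue) == stored_a)  # True
```

Each sweep moves the network from one discrete state to the next, which is the sense in which this model still treats time as a sequence of separate steps.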

Memory is then a ‘metastable’ system connecting the states of multiple networks over multiple time steps, each state defined by a pattern vector tied to a particular time step. This is still connectionist associativity, and we now consider why it is problematic for an event defined by dynamical invariants rather than static features.

Now, I am hypothesising (on the basis of Gibson’s ecological perception theory and its extension by Stephen E. Robbins, to be expanded upon far more in future articles) that a perceptual event such as coffee stirring cannot be captured by static features and connectionism. That is, if we capture some set of features X of event A (coffee stirring at home) and some features X’ of event A’ (coffee stirring at Costa), and associate these so that each static feature x of X connects to some static feature x’ of X’, then we will not be able to recover the function of memory in human beings.

Why is this? Consider the following two examples by way of introduction. First, there is a problem rooted in linguistics: that of polysemy and homonymy. Polysemy is the capacity for a sign to have multiple related meanings; for example, ‘paper’ has multiple linguistic functions (e.g. paper for writing, an academic publication, a newspaper and so forth). Homonymy is the case of a single word form attached to multiple unrelated meanings (e.g. ‘bank’ as a riverbank and as a financial institution); its converse, multiple word forms attached to the same meaning, is synonymy. In each case, one sign does not map onto exactly one meaning.

In any connectionist network (such as the neural network above), we have a set of paired associates (as described for the coffee-stirring events). However, we must first attach specific semantics to each input before the network can follow through to its paired associate. We thus constrain signs and meanings to a one-to-one relationship, even though human language does not have this feature (as described above).

In terms of our coffee-stirring events, each static feature x of event A that we have encoded as a distinct pattern vector (or some other representation) is now effectively paired with a feature x’ of A’. But now we will have event interference, since each feature of A is plausibly related to many other similar events besides A’, making recall very non-specific and dysfunctional. Further, how are the semantics attached to such a system in the first place, without an external ‘semantic agent’ to reduce the event, if the features of our event representations are inherently polysemous and homonymous?
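The interference problem can be illustrated with a minimal sketch, assuming a simple linear (outer-product) associator as a stand-in for the connectionist paired-associate scheme described above. The features, events and associates are hypothetical, and a realistic network would add thresholds or nonlinearities; the sketch only shows that recall is driven by overlap of static features, so a cue sharing features with several stored events activates all of their associates.

```python
# Hypothetical static features of coffee-related events.
FEATURES = ["spoon", "cup", "clinking", "table", "steam", "stirring"]


def encode(present):
    """Binary feature vector: 1 if the static feature is present, else 0."""
    return [1 if f in present else 0 for f in FEATURES]


def train_pairs(pairs):
    """Outer-product (Hebbian) rule for paired associates: W += y x^T per pair."""
    n_out = len(pairs[0][1])
    weights = [[0] * len(FEATURES) for _ in range(n_out)]
    for x, y in pairs:
        for i in range(n_out):
            for j in range(len(FEATURES)):
                weights[i][j] += y[i] * x[j]
    return weights


def read_out(weights, x):
    """Linear read-out: each associate's score is its summed weighted input."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Three hypothetical stored events, each paired with a one-hot associate:
# index 0 = "stirring at Costa", 1 = "washing up", 2 = "tea at a friend's".
pairs = [
    (encode({"spoon", "cup", "clinking", "stirring"}), [1, 0, 0]),
    (encode({"spoon", "cup", "clinking"}), [0, 1, 0]),
    (encode({"spoon", "cup", "table", "steam"}), [0, 0, 1]),
]
W = train_pairs(pairs)

print(read_out(W, encode({"spoon", "cup", "clinking", "stirring"})))  # [4, 3, 2]
print(read_out(W, encode({"spoon", "cup"})))  # [2, 2, 2]
```

Even the full stirring cue partially activates the other two associates, and a cue made only of shared features cannot discriminate between the stored events at all.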

However, let us instead consider the indivisible dynamics that differentiate our events A and A’. In this case, we have different acoustical invariants, the visual form of the cups, the table they rest on, and so on. Thus we can make memory reasonably specific by immediately analogising the new event A’ with all prior events (including A) that have similar dynamics. Of course, the specific physical details of these invariants have not been considered here; the reader should simply note that they are ‘indivisible’ over time, and we will dedicate an article to this in future.

For further detail on how this works, for readers who do not want to consult the academic paper (although it is itself really interesting), see the following YouTube video:

It seems, then, that a path toward understanding the mind which does not situate itself entirely within mind-brain identity and the spatial model of memory is slightly clearer. Expanding on this topic is probably the most important theme in this entire series, so stay tuned!

Conclusion

Overall, we have dissected the metaphysical roots of the current model of mind: mind as a brain-based epiphenomenon resulting from information integration and binding in neural networks, resting on a discrete temporal model of memory, which is essentially the spatial account. We have also presented a potential alternative, based on the temporal model of memory and Filter Theory. This particular revolutionary model of the soul will be treated at much greater length after our next articles, which dissect the Bergsonian metaphysic more deeply, so I hope the reader is excited!

Unity.